LLM use case: Reducing Customer Success response times by 50% with RudderStack, kapa.ai, and Thena

At RudderStack, our main mission is helping our customers turn customer data into a competitive advantage, and we’ve been diving deep into how large language models (LLMs) can support this mission. In our previous post, we shared our own journey with LLM integration, showcasing a few prototypes and discussing the questions we’re asking and challenges we’re tackling. Building on these insights, we’re eager to share how we’ve used LLMs to make our technical support team more efficient.

Our Customer Support team, split into Customer Success Managers (CSMs) and Technical Account Managers (TAMs), plays a crucial role in helping clients use our tools, implement use cases, and resolve any issues they face. Our CSMs focus on overall client health and provide strategic advice, while the TAMs tackle the nitty-gritty technical questions and support tickets.

Balancing efficiency and quality in the world of CS is like walking a tightrope. To help our CSMs and TAMs manage their workload and improve response times, we built an LLM workflow using RudderStack, kapa.ai (an AI tool that learns from technical documentation, then provides a question-answer interface for developers), and Thena (our support ticketing system).

How we built an LLM-powered workflow to increase the speed and quality of support responses

Our TAMs are both knowledgeable and technical, but they haven’t memorized every use case and detail from our documentation, or every detail about every customer. That means that they often have to manually retrieve information—both about our product and the customer—in order to provide the best response to questions. The time it takes to manually look up information from different systems (docs, Salesforce, Thena) adds up, and can cost hours of a TAM’s time in a given day, significantly decreasing efficiency.

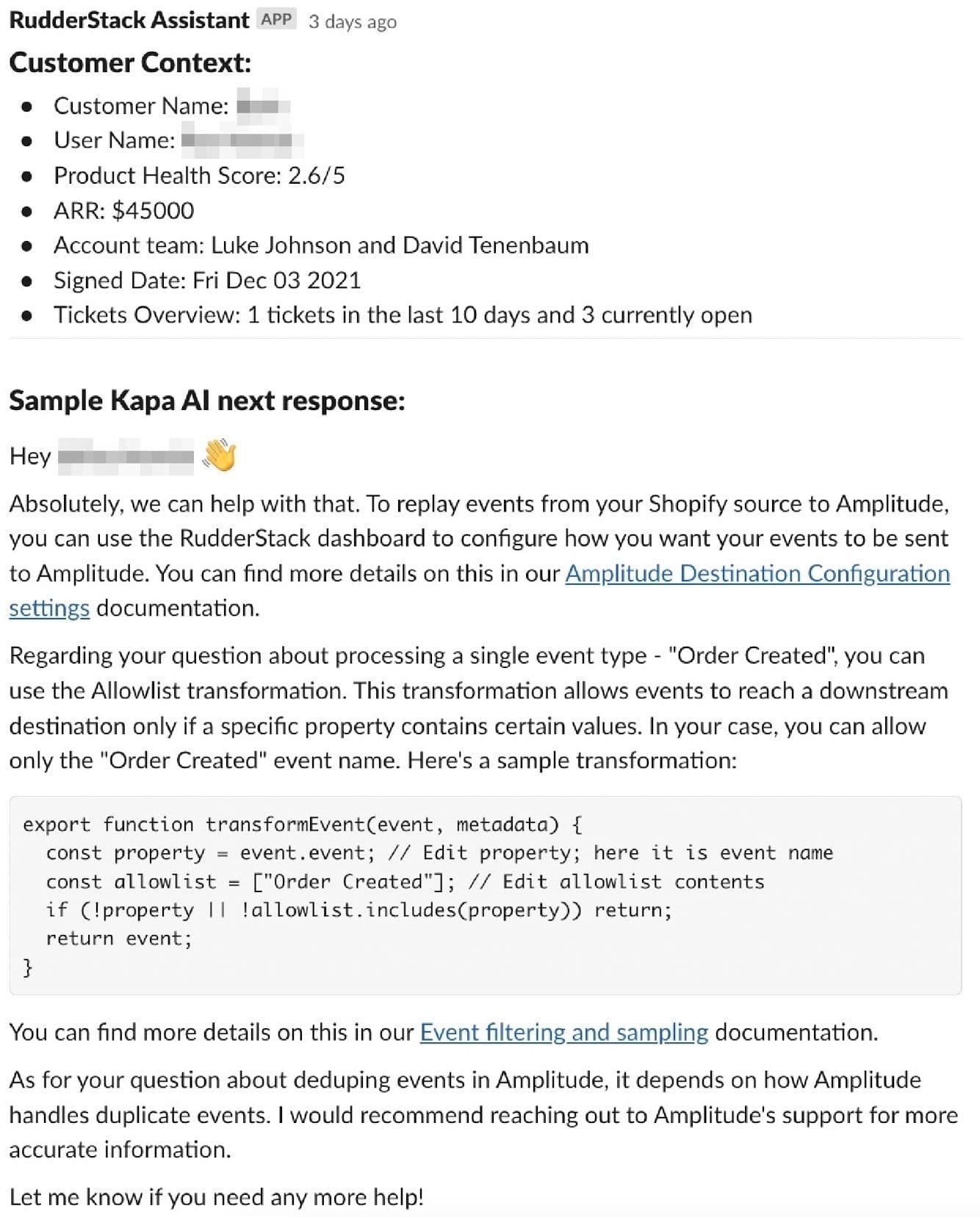

Our TAM leader, David Daly, was determined to figure out if we could eliminate that inefficiency. Starting with an internal POC, we ultimately built out an API-driven pipeline that has made our TAMs 50% more efficient. Here’s an overview:

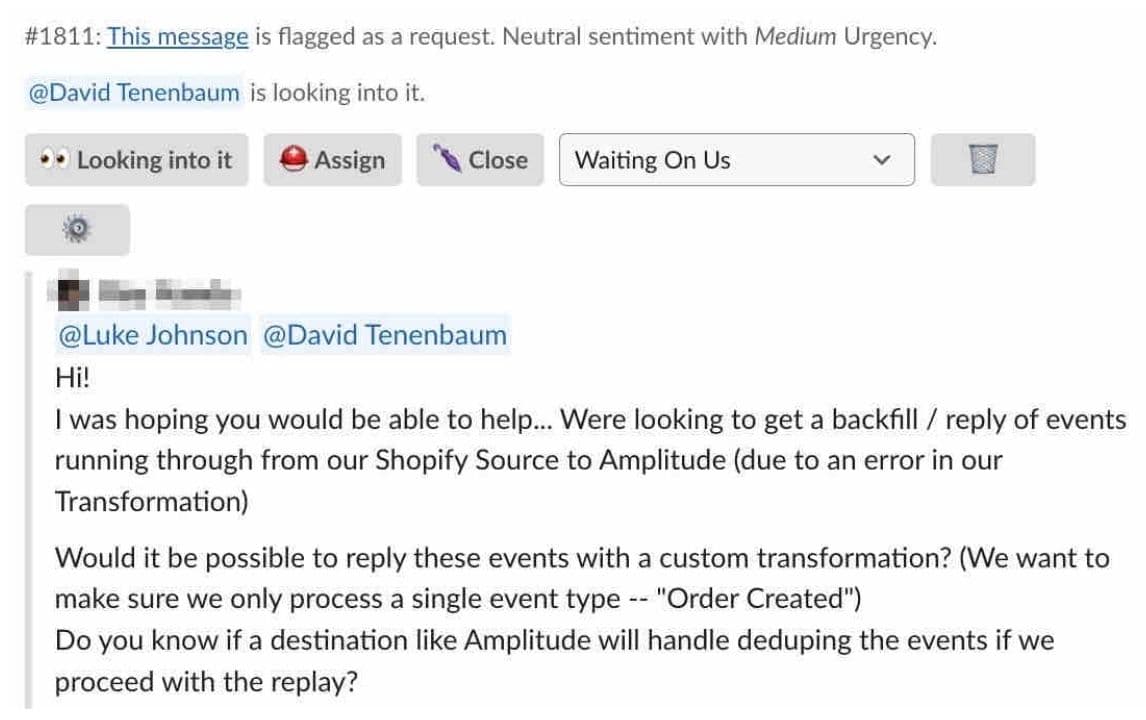

- Customer questions via Slack create tickets in Thena

- Thena tickets (questions) are routed to a RudderStack webhook source

- Using RudderStack Transformations, we ping our own internal customer 360 data set in real time via the Activation API to pull relevant information about the customer

- Using RudderStack Transformations, the kapa.ai API is pinged with the question and customer context, and a technical response is returned and appended to the payload

- The enriched payload, complete with an answer and customer details, is routed back to the TAM in Slack via a RudderStack webhook destination

Dig in below for the full details on each step of the build.

Phase 1: Accurate answers for internal queries without all the manual lookup

To launch our initial POC, we first set up a comprehensive knowledge base in kapa.ai, configuring the content sources for our Kapa bot to include technical documentation, FAQs, and troubleshooting guides that cover everything from implementation steps to detailed technical information. The next step was to set up the Slack integration, making it easy for our Customer Support team to submit queries to kapa.ai directly in Slack. When RudderStack team members have technical questions, they post them in our Kapa Slack channel. The bot draws on the knowledge base to answer, and our team can give feedback on each answer to improve accuracy over time.

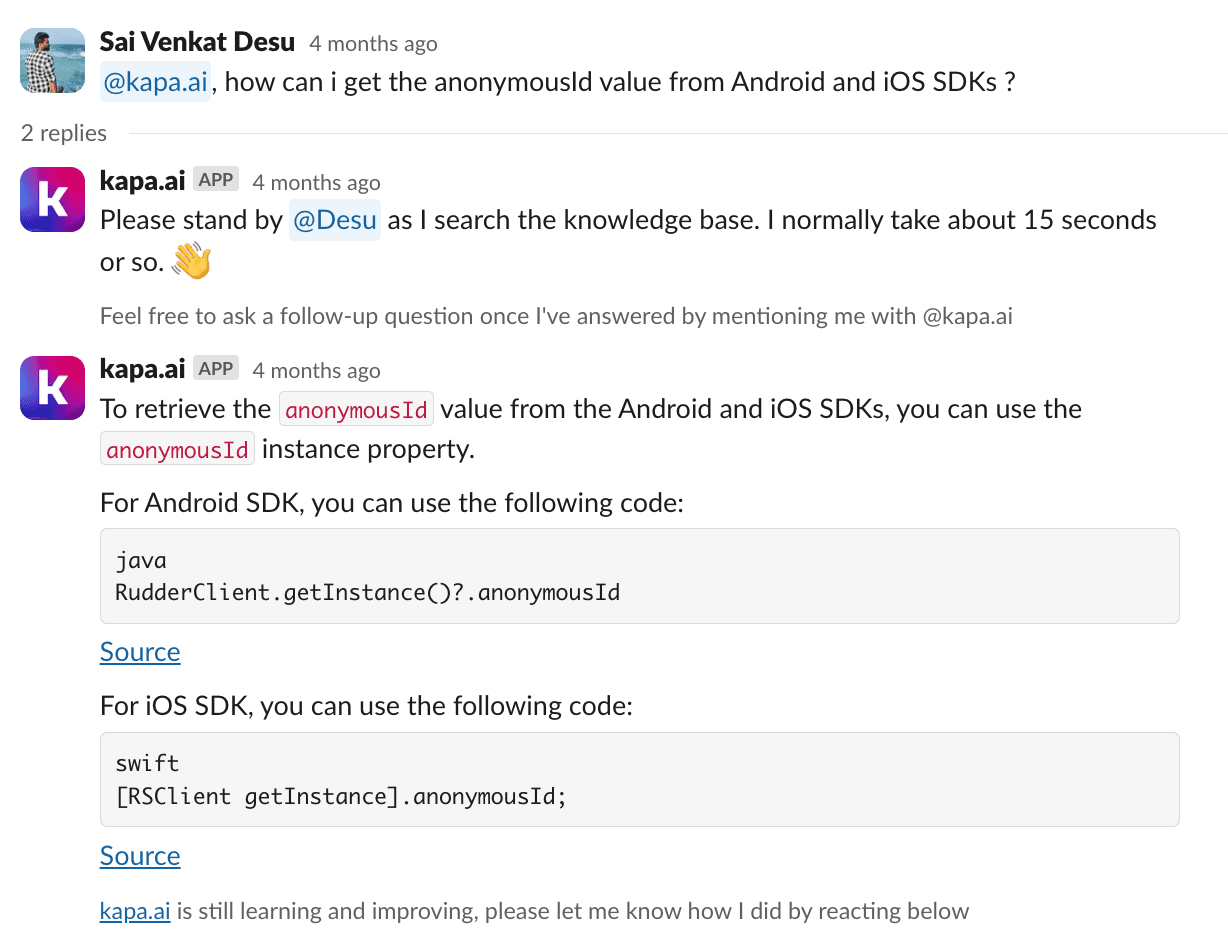

This meant no more sifting through docs or constant DMs to subject matter experts. Here’s an example of how our team uses this internal resource to answer technical questions:

By cutting out the manual search, our TAMs could handle customer queries faster and more accurately, transforming our internal support into an even more well-oiled machine.

“Across the data and web teams, we shipped this project in less than 5 days, which was incredibly fast based on my experience. At past companies, it took us many months to build out real-time use cases like this, and even then they were hard to scale and maintain. With this setup, we can deploy new tests for marketing in a few hours.”

Gerlando Piro, Head of Web Presence at RudderStack

This initial use case was a huge success, but as our client list grew, so did the number of support tickets – it was time to build a deeper integration with Thena, our support ticket system, and put together an integrated pipeline from ticket to TAM.

Phase 2: Building an LLM-driven support ticket answer app

Getting fast answers to questions from kapa.ai was great, but our TAMs were still manually copying customer questions over to the kapa chatbot. They also still had to manually retrieve important information about customers, such as their plan type and number of recent support tickets.

In the second phase of the project, we had two goals:

- Automate the question/answer process as a pipeline

- Enrich the answers with helpful details about the customer

Here’s how we built it:

Step 1: Customer Interaction

One of our customers pops a question into our shared Slack channel. They might ask something like, “How do I set up a new data pipeline using your API?” or “Why is my data sync taking so long?” These questions can range from simple setup instructions to more technical issues.

Step 2: Ticket Creation

As soon as their question lands in Slack, Thena, our CS system, automatically creates a support ticket based on their query.

Step 3: Data ingestion and enrichment pipeline

Once the ticket is created in Thena, RudderStack steps in. We pick the ticket up via a Webhook Source (a method that allows real-time data transfer between systems) and enrich the data by pinging two key APIs:

Activation API: We use RudderStack Profiles to create and manage our customer 360 data. RudderStack’s Activation API makes all of that data available in real time via API. This makes it easy for us to use Transformations to ping the API, look up a customer, pull down relevant details, and append them to the payload. Here’s a code sample from the Transformation:

JAVASCRIPT
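As a rough illustration of this lookup step (not the production Transformation), the sketch below builds a profile request, then copies a small set of traits onto the ticket event. The endpoint URL, auth scheme, request body shape, event field `requesterEmail`, and trait names (`plan_type`, `recent_ticket_count`, `product_health_score`) are all assumptions made for the example. The HTTP call is passed in as a hypothetical `httpPost` function so the logic can run outside RudderStack.

```javascript
// Sketch only: URL, auth, body shape, and trait names are placeholders.
const ACTIVATION_API_URL = "https://example.invalid/activation";

// Build the lookup request for the customer who opened the ticket.
function buildProfileLookup(event, apiToken) {
  return {
    url: ACTIVATION_API_URL,
    headers: {
      Authorization: `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: { id: { type: "email", value: event.properties.requesterEmail } },
  };
}

// Return a copy of the event with an explicit, minimal customer context.
// Missing traits become null so downstream steps can tell "unknown" apart.
function appendCustomerContext(event, profile) {
  const traits = (profile && profile.traits) || {};
  return {
    ...event,
    properties: {
      ...event.properties,
      customerContext: {
        planType: traits.plan_type ?? null,
        recentTicketCount: traits.recent_ticket_count ?? null,
        healthScore: traits.product_health_score ?? null,
      },
    },
  };
}

// httpPost(url, headers, body) is a hypothetical helper that resolves to
// the parsed JSON response.
async function enrichWithProfile(event, apiToken, httpPost) {
  const req = buildProfileLookup(event, apiToken);
  try {
    const profile = await httpPost(req.url, req.headers, req.body);
    return appendCustomerContext(event, profile);
  } catch (err) {
    // Let the ticket continue unenriched rather than dropping it.
    return appendCustomerContext(event, null);
  }
}
```

Returning a copy instead of mutating the event keeps the step easy to test, and falling back to an empty context means a failed lookup delays nothing: the TAM still receives the ticket.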

kapa.ai API: Next, we pass both the customer information and question to the kapa.ai API to generate a response. Here’s a code sample from the Transformation:

JAVASCRIPT
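A minimal sketch of this step, under stated assumptions, might look like the following. It is not the production Transformation: the endpoint URL, the `X-API-KEY` header, the `query` request field, the `answer` response field, and the event fields `question`, `customerContext`, and `suggestedAnswer` are placeholders chosen for illustration. As before, the HTTP call is injected as a hypothetical `httpPost` function.

```javascript
// Sketch only: endpoint, header name, and field names are placeholders.
const KAPA_CHAT_URL = "https://example.invalid/kapa/chat";

// Combine the customer's question with whatever context is available.
function buildKapaQuery(question, customerContext) {
  const parts = [question.trim()];
  if (customerContext && customerContext.planType) {
    parts.push(`Customer plan: ${customerContext.planType}`);
  }
  return parts.join("\n\n");
}

// Return a copy of the event with the generated answer attached.
// A missing or malformed response yields null instead of throwing.
function appendAnswer(event, kapaResponse) {
  const answer =
    kapaResponse && typeof kapaResponse.answer === "string"
      ? kapaResponse.answer
      : null;
  return {
    ...event,
    properties: { ...event.properties, suggestedAnswer: answer },
  };
}

// httpPost(url, headers, body) is a hypothetical helper that resolves to
// the parsed JSON response.
async function answerTicket(event, apiKey, httpPost) {
  const { question, customerContext } = event.properties;
  const headers = { "X-API-KEY": apiKey, "Content-Type": "application/json" };
  const body = { query: buildKapaQuery(question, customerContext) };
  try {
    return appendAnswer(event, await httpPost(KAPA_CHAT_URL, headers, body));
  } catch (err) {
    // No answer is better than a dropped ticket: the TAM answers manually.
    return appendAnswer(event, null);
  }
}
```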

Step 4: Routing the enriched response to TAMs in Slack

At this point in the pipeline, the payload has passed through the enrichment Transformation and contains both customer context as well as the answer to the customer question. The last step is routing the enriched payload back into Slack. We use a RudderStack webhook destination to push the message directly to the TAM assigned to the account.
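To make the shape of that final message concrete, here is a small sketch of how an enriched event could be turned into a Slack message body. It assumes the destination endpoint accepts a JSON object with a `text` field, and the event fields (`assignedTamSlackId`, `customerName`, `question`, `suggestedAnswer`, `customerContext`) are hypothetical names used only for this example.

```javascript
// Sketch only: event field names are placeholders, and the { text } body
// assumes a Slack-style webhook endpoint on the receiving side.
function buildSlackMessage(event) {
  const p = event.properties;
  const ctx = p.customerContext || {};
  // Slack renders <@USER_ID> as a mention of that user.
  const mention = p.assignedTamSlackId ? `<@${p.assignedTamSlackId}> ` : "";
  const lines = [
    `${mention}New ticket from ${p.customerName || "unknown customer"}`,
    `*Question:* ${p.question}`,
    `*Suggested answer (review before sending):* ${p.suggestedAnswer || "no answer generated"}`,
    `*Plan:* ${ctx.planType ?? "n/a"} | *Recent tickets:* ${ctx.recentTicketCount ?? "n/a"} | *Health score:* ${ctx.healthScore ?? "n/a"}`,
  ];
  return { text: lines.join("\n") };
}
```

Putting the question, the suggested answer, and the customer context in one message lets the TAM review everything without switching tools.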

The TAM first provides human-in-the-loop review of the technical answer to mitigate hallucination (LLMs are amazing, but not perfect, and accuracy is critical for production data pipeline questions).

TAMs also review the customer context in order to catch any situations that might need more attention. For example, if a customer has a low product health score and multiple recent support tickets open, the TAM might want to ping the CSM on the account and let them know they should set up a check-in meeting.
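The kind of check described above could also be expressed as a simple rule to highlight accounts for the TAM. The sketch below is illustrative only: the thresholds are made-up values, and the `healthScore` and `recentTicketCount` fields are hypothetical names for the customer context. The final judgment stays with the TAM.

```javascript
// Sketch only: threshold values and field names are illustrative.
const DEFAULT_THRESHOLDS = { minHealthScore: 50, minOpenTickets: 2 };

// True when an account has both a low health score and multiple recent
// tickets. Missing or non-numeric data never triggers a flag.
function needsCsmFollowUp(customerContext, thresholds = DEFAULT_THRESHOLDS) {
  if (!customerContext) return false;
  const { healthScore, recentTicketCount } = customerContext;
  if (typeof healthScore !== "number" || typeof recentTicketCount !== "number") {
    return false;
  }
  return (
    healthScore < thresholds.minHealthScore &&
    recentTicketCount >= thresholds.minOpenTickets
  );
}
```

For example, `needsCsmFollowUp({ healthScore: 30, recentTicketCount: 3 })` returns `true` with the default thresholds, while an account with a healthy score and the same ticket count returns `false`.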

50% more efficiency in TAM response times

This internal CS app has made our TAMs 50% more efficient in responding to technical questions from our customers, which is a drastic improvement. In fact, this Kapa workflow has automated ~70% of responses to our most common queries.

With an LLM handling the baseline workload, our TAMs have far more bandwidth to provide deeper value to our customers, troubleshooting more complex issues and helping them implement new use cases.

Build your own LLM apps with RudderStack

If you’re interested in building a similar LLM-based use case with your customer data, please reach out. We’d love to hear about your use case and share more about what we’re working on.

We’ve got more LLM apps in the works, so follow us on LinkedIn to get updates.

Published: June 13, 2024
