How to use LLMs for customer data: Two prototypes

The recent wave of innovation in generative AI has transformed machine learning and is changing the way we work. For technologists, LLMs offer new ways to solve problems and deliver customer experiences that were hard to imagine a few years ago.

On a recent episode of The Data Stack Show, Continual Founder and CEO Tristan Zajonc articulated this well:

“This last wave of Gen AI is a huge transformation. It's actually not a continuation, I think, from the traditional MLOps world. It completely changed the capabilities of these models. We were all talking about things like forecasting and classification and maybe little bits of optimization. Now, we're thinking about generating some of the most creative texts that you could imagine, or images. We've opened up a huge new set of potential application possibilities.”

Some use cases for LLMs are obvious and have produced notable results. Klarna’s AI customer support chat and GitHub’s Copilot coding assistant are great examples.

At RudderStack, we build tooling for customer data. We’re thinking deeply about how LLMs can help our customers turn their data into competitive advantage and how RudderStack can make that easier. In this post, we’ll share how we’re thinking about integrating with LLMs and show you a few prototypes we’re working on to explore these ideas. We’ll also detail some of the challenges we’re still actively solving as we experiment.

How we’re thinking about LLMs and customer data

The first wave of impact from LLMs has been transformational operational efficiency for knowledge workers. In many cases, LLMs can completely automate previously time-consuming work like information summary and discovery and content generation (text, images, audio, and even video). Individuals leverage tools like ChatGPT heavily for this kind of work, but SaaS tools are also adding operational efficiency to their products. For example, Gong, a call recording service, uses LLMs to summarize sales call transcripts and make them easier to search.

When it comes to incorporating LLMs into SaaS products, it’s tempting to ride the hype wave and force an LLM endpoint into your product experience. There are obvious wins, like content generation in email platforms, code generation in analytics platforms, and LLM-based summary, search, and discovery (in tools like Gong). But it’s not that simple for every company because not every SaaS tool directly supports operational areas where LLM strengths can make an immediate impact.

When it comes to customer data, most LLM use cases address problems that have existed for a long time. Their main value is making these tough problems easier and cheaper to solve.

Klarna’s case study is a great example: providing excellent customer support with maximum efficiency is a goal for almost every business, but piecing together the data, technology, and human components into a streamlined, high-quality, and cost-efficient function is a massive undertaking. LLMs can sit in the middle of that Venn diagram and help connect the various components in a way that was previously inaccessible to most companies because of the cost in both time and money. Specifically, Klarna’s AI bot does the work of around 700 humans, is 25% more accurate than humans, and can automate support in over 35 languages. Previously, it was possible for Klarna to build that technology, but it wasn’t practical. LLMs have made it both economically practical and technologically accessible.

Narrow data, wide data, and the foundation underneath

Klarna’s use case focuses on a very specific type of customer data (support tickets). We’ll call these use cases, where the input is one particular type of customer data, narrow data use cases. Other examples include analyzing chat transcripts for sentiment or having a bot answer questions about a static dataset. We recently introduced an Ask AI beta on our docs site (more on this later).

Many use cases, though, are more complex because they require a comprehensive set of data. We’ll call these wide data use cases. Recommendations are a good example. You could take a narrow approach to making recommendations, but the experiences that convert the best are fed with context from the entire customer journey.

With narrow use cases, you can get away with cleaning a particular, narrow data set and achieve success (e.g., feed an LLM with tens of thousands of support tickets and resolutions). This doesn’t hold for wide data use cases. They require you to solve a host of additional challenges upstream in order to feed the LLM with the right data. For customer data, this most often means identity resolution, customer segmentation, and feature development. What’s new is old: this is a tale as old as time in “traditional” machine learning.

In practical terms, having comprehensive data doesn’t change how you interact with an LLM. It’s still input/output. The difference is that you’re giving the LLM inputs computed on top of a complete, curated customer data set. For example, if you want to recommend (and cross-sell) a new product category to active, high-LTV loyalty program members, you have to solve the following problems:

- Identity resolution (to join user data from all tables across the warehouse)

- Loyalty program membership status

- LTV computation

- Known category interest (i.e., have purchased or viewed categories X or Y)

- Recent activity (have interacted on web, mobile, in-store, etc.)

In the above use case, the input for the LLM could be simple – a list of categories for each user. But building that list is a monumental task.

Dare we say that asking an LLM for a category recommendation is easy compared with all the work involved in creating the category interest list required to ask for that recommendation?

RudderStack and LLMs

It’s easy to say, “Feed an LLM with reliable, complete customer profiles and get better results,” but anyone who has tried to build an actual use case knows there’s much more to it. You have to answer a multitude of tricky questions:

- What kind of LLM are you using?

- How exactly do you feed it?

- What do you feed it?

- What infrastructure do you use to create the pipelines required to operationalize the use case?

- How do you build the right prompt and handle hallucination? (See the end of the post for more thoughts on these challenges)

Like everyone else, we’re still figuring out the best answers to these questions. We’re actively working on them and dogfooding RudderStack to learn how to best leverage the power of LLMs as part of the customer data stack. Here are three specific experiments we’re working on:

- Adding customer 360 context to narrow data use cases

- Simplifying LLM input development to create 1-1 content recommendations

- Integrating LLM workflows into existing customer data pipelines

Below, we’ll provide an overview of each of these experiments, including details about the data flows for each prototype.

Adding customer 360 context to narrow data use cases

Narrow data use cases are more efficient and more powerful when you package LLM responses with all of the relevant customer context. For example, you can ask an LLM to respond to a support request, summarize product feedback, or analyze a QBR transcript, but without context, the value of the response is limited. A business user must piece together the context themselves, which they could do by logging into various tools to retrieve information. However, this takes time and often still results in a fragmented picture (what was the name of that field again?).

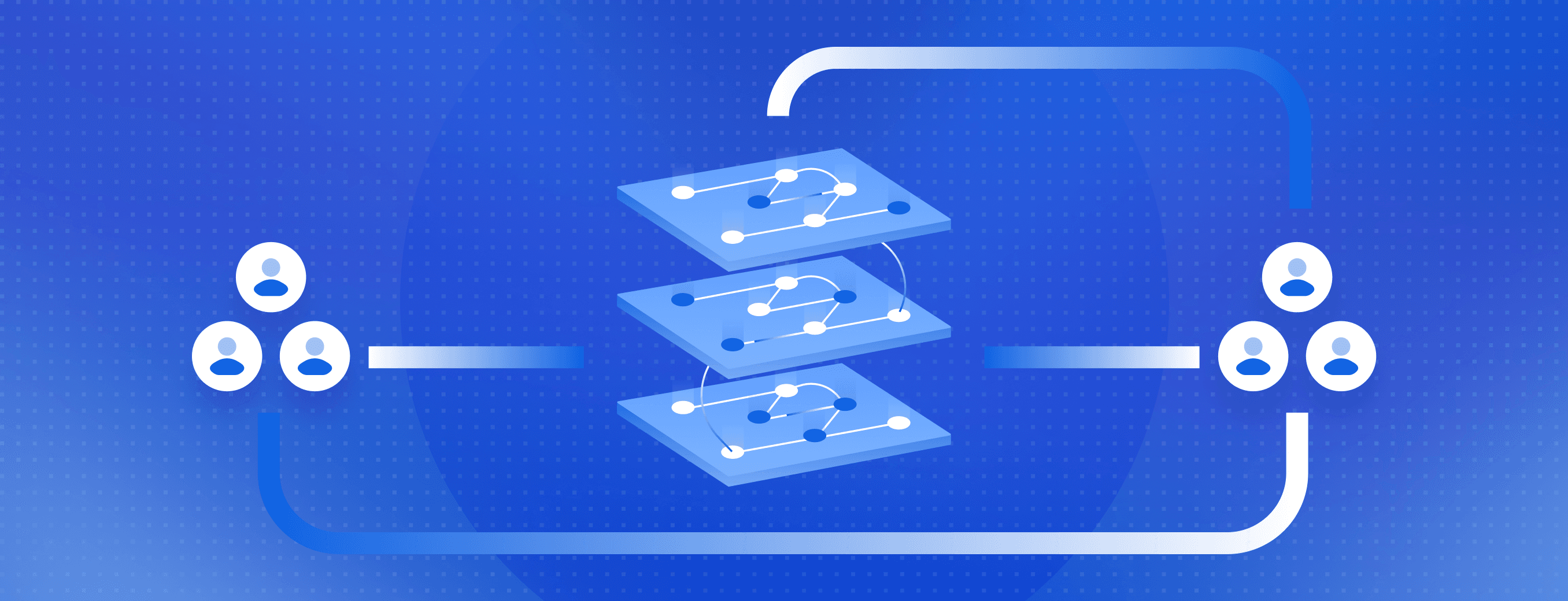

At RudderStack, we’re experimenting with pairing RudderStack Profiles with an LLM as part of our support ticket flow to increase the efficiency of our Technical Account Management team.

Prototype: using RudderStack, Thena, and kapa.ai to make Technical Account Managers more efficient

We use kapa.ai to create an LLM-driven, self-serve technical question-answer experience in our docs. We also use the same LLM to automate answers to technical questions that come from our customers in shared Slack channels.

Here’s the basic flow:

- A customer asks a question in a shared Slack channel

- Thena (our customer support system) automatically creates a ticket

- RudderStack picks up new Thena tickets via a Webhook Source

- Using Transformations, we ping two APIs to augment the ticket payload:

  - We route the question to Kapa’s API to get a response from the LLM

  - We route the customer ID to our own Activation API to pull down information from our internal account-level customer 360 table. This includes customer health score, number of recent tickets, number of open tickets, and other context.

- We package the customer context and LLM response into a single payload

- We push the final payload to a Slack channel where the Technical Account Manager can triage it
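The packaging step in this flow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code running in our Transformations: `enrich_ticket`, `format_for_slack`, the payload field names, and the two stand-in functions that replace the real API calls are all hypothetical.

```python
from typing import Callable


def enrich_ticket(
    ticket: dict,
    ask_llm: Callable[[str], str],
    get_account_context: Callable[[str], dict],
) -> dict:
    """Combine a ticket, an LLM draft answer, and account context into one payload."""
    draft_answer = ask_llm(ticket["question"])
    context = get_account_context(ticket["customer_id"])
    return {
        "ticket_id": ticket["ticket_id"],
        "question": ticket["question"],
        "draft_answer": draft_answer,
        "customer_context": context,
    }


def format_for_slack(payload: dict) -> str:
    """Render the enriched payload as a plain-text message for triage."""
    context_lines = [
        f"- {key}: {value}" for key, value in payload["customer_context"].items()
    ]
    return "\n".join(
        [
            f"Ticket {payload['ticket_id']}: {payload['question']}",
            "Draft answer (review before sending):",
            payload["draft_answer"],
            "Customer context:",
            *context_lines,
        ]
    )


# Hypothetical stand-ins for the two API calls so the sketch runs offline.
def fake_llm(question: str) -> str:
    return f"(draft answer to: {question})"


def fake_account_lookup(customer_id: str) -> dict:
    return {"health_score": 62, "open_tickets": 2, "recent_tickets": 5}


ticket = {
    "ticket_id": "T-1",
    "customer_id": "acct_42",
    "question": "How do I set up a webhook source?",
}
payload = enrich_ticket(ticket, fake_llm, fake_account_lookup)
print(format_for_slack(payload))
```

Passing the two lookups in as functions keeps the packaging logic independent of any particular API client, which makes it easy to test without network access.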

We’re working out some kinks with the prompt to make responses more consistently helpful, but when it works well, our TAMs get a considerable efficiency boost because they can quickly review an answer for accuracy and then decide how to deliver it to the customer. For example, they may be able to provide additional helpful information or offer a meeting if a customer has a low product health score.

Stay tuned for a detailed breakdown of this use case in the coming weeks.

Simplifying LLM input development to create 1-1 content recommendations

Returning to our wide data use case example from above – making a product category recommendation – let’s imagine how you might prompt an LLM to get a recommendation for a single user.

You might feed the LLM with the following data:

- A list of all product categories for your company

- A list of the best-selling or highest-margin categories

- A list of the product categories for the customer’s past purchases

- A list of the categories most recently viewed by the customer (over some time period, say 30 days)

The first two items in that list probably exist in a BI dashboard or spreadsheet. The last two inputs are where things get tricky because you first have to model your data in a way that allows you to see both past purchases and recent browsing behavior for this customer – and those are just two customer features out of hundreds that business teams use.
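Once those four lists exist, turning them into a prompt is the easy part. Here is a minimal sketch, assuming the inputs arrive as plain Python lists. The function name, the prompt wording, and the choice to exclude already-purchased categories (so the ask is a true cross-sell) are illustrative assumptions, not a tested prompt.

```python
import json


def build_category_prompt(
    all_categories: list[str],
    priority_categories: list[str],
    purchased_categories: list[str],
    recently_viewed_categories: list[str],
) -> str:
    """Assemble one customer's inputs into a single prompt string."""
    purchased = set(purchased_categories)
    # Assumption: only categories the customer has not bought from are candidates.
    candidates = [c for c in all_categories if c not in purchased]
    inputs = {
        "candidate_categories": candidates,
        "priority_categories": priority_categories,
        "purchased_categories": purchased_categories,
        "recently_viewed_categories": recently_viewed_categories,
    }
    return (
        "Recommend exactly one product category for this customer.\n"
        "Choose only from candidate_categories and reply with the category name only.\n"
        f"Inputs: {json.dumps(inputs)}"
    )


prompt = build_category_prompt(
    all_categories=["shoes", "hats", "bags"],
    priority_categories=["bags"],
    purchased_categories=["shoes"],
    recently_viewed_categories=["hats"],
)
print(prompt)
```

Serializing the inputs as JSON keeps the prompt structure stable from user to user, so only the data changes between calls.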

Our Profiles product drastically simplifies the development of these types of user or customer-level inputs for LLMs:

- Profiles generates an identity graph that serves as a map for where a user’s data lives across all of your customer data tables in your warehouse.

- Profiles makes it easy to compute complex features, like a list of the product categories for a single user’s entire purchase history.
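To make the idea of an identity graph concrete, here is a minimal sketch that groups identifiers with a union-find structure. It is an illustration only and does not describe how Profiles is implemented. The `resolve_identities` function and the identifier formats (`anon:`, `email:`, `user:`) are hypothetical.

```python
from collections import defaultdict


def resolve_identities(edges: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group identifiers that are linked directly or transitively."""
    parent: dict[str, str] = {}

    def find(node: str) -> str:
        parent.setdefault(node, node)
        while parent[node] != node:
            parent[node] = parent[parent[node]]  # path halving
            node = parent[node]
        return node

    for left, right in edges:
        root_left, root_right = find(left), find(right)
        if root_left != root_right:
            # Keep the lexicographically smaller root so results are deterministic.
            keep, drop = sorted((root_left, root_right))
            parent[drop] = keep

    clusters: dict[str, list[str]] = defaultdict(list)
    for node in sorted(parent):
        clusters[find(node)].append(node)
    return dict(clusters)


# Each edge says "these two identifiers were seen together in some table".
edges = [
    ("anon:a1", "email:x@example.com"),
    ("email:x@example.com", "user:7"),
    ("anon:b2", "user:9"),
]
identity_clusters = resolve_identities(edges)
print(identity_clusters)
```

Each resulting cluster stands for one resolved user, and user-level features such as purchase history are then computed across every identifier in that cluster.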

We’re using this same methodology to create content recommendations for our users. Here’s how.

Prototype: using RudderStack Profiles and OpenAI to generate 1-1 content recommendations

We have great content spread across our marketing site, blog, documentation, and other sources, but it can be hard to surface the most relevant content for each user. Because our site is deep, it can also be hard for users to discover the most helpful content on their own, especially if they are time-constrained.

LLMs are an excellent solution for recommending content based on predefined inputs, and Profiles makes it really simple for us to compute user-level recent browsing history.

Here’s the basic flow:

- Write SQL to create a simple table of the top 100 pieces of content by unique views (this is a simple join across a few PAGES tables from different SDK sources like docs, marketing sites, etc.)

- Resolve user identities through Profiles’ identity resolution

- Use Profiles to compute recent browsing history for each user (i.e., pages visited within the last 14 days)

- Use Profiles to feed the LLM the inputs (top 100 pages and recent browsing data) and ask it to recommend the top 3 pieces of content for each user (this runs as part of the Profiles job)

- Append the recommended content to each user profile as a feature (this also runs as part of the Profiles job)

- Reverse ETL the recommended content to Customer.io to use in a campaign

- Send automated emails with the recommended content as part of the email body
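In the prototype, the first and third steps live in SQL and Profiles. The sketch below only illustrates the logic of those two inputs in plain Python, assuming page views are available as records with `user_id`, `url`, and `viewed_at` fields. The function names and sample data are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def top_pages_by_unique_views(page_views: list[dict], limit: int = 100) -> list[str]:
    """Rank page URLs by the number of distinct users who viewed them."""
    viewers: dict[str, set[str]] = defaultdict(set)
    for view in page_views:
        viewers[view["url"]].add(view["user_id"])
    # Sort by unique viewers (descending), then URL, so ties are deterministic.
    ranked = sorted(viewers, key=lambda url: (-len(viewers[url]), url))
    return ranked[:limit]


def recent_history(
    page_views: list[dict], user_id: str, now: datetime, days: int = 14
) -> list[str]:
    """Return the distinct URLs a user visited in the window, most recent first."""
    cutoff = now - timedelta(days=days)
    views = sorted(
        (v for v in page_views if v["user_id"] == user_id and v["viewed_at"] >= cutoff),
        key=lambda v: v["viewed_at"],
        reverse=True,
    )
    # dict.fromkeys removes duplicates while preserving order.
    return list(dict.fromkeys(v["url"] for v in views))


now = datetime(2024, 5, 23)
page_views = [
    {"user_id": "u1", "url": "/docs/profiles", "viewed_at": datetime(2024, 5, 20)},
    {"user_id": "u1", "url": "/blog/llms", "viewed_at": datetime(2024, 5, 22)},
    {"user_id": "u1", "url": "/pricing", "viewed_at": datetime(2024, 4, 1)},
    {"user_id": "u2", "url": "/docs/profiles", "viewed_at": datetime(2024, 5, 21)},
    {"user_id": "u2", "url": "/docs/profiles", "viewed_at": datetime(2024, 5, 22)},
]
top_pages = top_pages_by_unique_views(page_views, limit=2)
history_u1 = recent_history(page_views, "u1", now)
print(top_pages, history_u1)
```

Counting distinct users rather than raw views keeps one heavy visitor from pushing a page into the top list.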

We could certainly write SQL to pull together basic pageview data for a narrow data use case, but the reason we’re backing this project with Profiles is that we want to scale the experiment in terms of both customer segmentation (by targeting more complex cohorts) and the types of recommendations (e.g., PDF case studies and whitepapers vs. website pages).

Beyond drastically simplifying user-level feature computation, Profiles lets us scale LLM use cases significantly faster.

Stay tuned for a full architecture for this use case and results from the email campaign.

Integrating LLM workflows into existing customer data pipelines

One shared characteristic of each prototype above is that the LLM use case runs inside of an existing customer data pipeline, whether that’s ticket routing via event streaming or a customer 360 modeling pipeline via RudderStack Profiles.

This is important enough to call out on its own: one of the primary ways RudderStack helps companies leverage the power of LLMs is streamlining the workflow itself, eliminating the need to set up separate infrastructure for AIOps. We still have some work to do on the product front here, but it’s the same approach we used for our Python-based predictive features.

Stay tuned for some upcoming details on how we’re building native workflows in Snowflake Cortex.

…but LLMs aren’t magic, and there are some significant concerns to work out

We’re excited about the powerful use cases for LLMs and customer data, but anyone who has tried to build a production LLM app knows it’s hard to get right once you move beyond the most basic use cases. Here are the challenges we’re facing with our prototypes and how we’re thinking about them.

Hallucination

Hallucination creates real risk, and it’s difficult to spot or solve at scale.

We recently rolled out an “Ask AI” beta on our docs site through kapa.ai. It’s an awesome tool and has helped us move much faster than we could have on our own, but that doesn’t mean we haven’t had to put work in to make it successful. We’ve invested significant time monitoring the percentage and severity of uncertain answers, correcting hallucinations, and, most importantly, fixing outdated and incorrect data in our docs (Kapa’s tools for updating embeddings are great, by the way). And that’s on a highly structured, slowly changing dataset, with the primary changes being new content.

We’re actively iterating and experimenting with different solutions here, and we’re keeping a close watch as we do because if a hallucination tells one of our users to do the wrong thing, they could corrupt or lose customer data, which would be a critical problem (hence the beta flag and disclaimer).

When you think about customer data use cases, many of them involve some sort of customer experience or content (like our prototypes above), but if you’re generating that at scale for a considerable number of users, it’s nearly impossible to QA for hallucinations, meaning you run the risk of sending customers incorrect or unhelpful information. This risk is a big reason we’ve started with either human-in-the-loop flows (for our support ticket prototype) or low-risk personalization (recommending web pages).
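For the page recommendation case, one inexpensive check is to keep only recommendations that appear in the list of pages the model was given. The sketch below is an illustration rather than a description of our pipeline, and it only catches invented URLs, not poor or irrelevant picks. The function name and sample values are hypothetical.

```python
def validate_recommendations(
    llm_output: list[str], allowed_urls: list[str], k: int = 3
) -> list[str]:
    """Keep up to k recommendations that appear in the allowed list, without duplicates."""
    allowed = set(allowed_urls)
    valid: list[str] = []
    for url in llm_output:
        cleaned = url.strip()
        # Drop anything the model made up, plus repeats of valid pages.
        if cleaned in allowed and cleaned not in valid:
            valid.append(cleaned)
    return valid[:k]


allowed_pages = ["/docs/profiles", "/blog/llms", "/pricing"]
raw_output = ["/docs/profiles", " /blog/llms ", "/blog/made-up-post", "/docs/profiles"]
checked = validate_recommendations(raw_output, allowed_pages)
print(checked)
```

If the filtered list comes back shorter than expected, the pipeline can skip that user or fall back to the top pages instead of sending an invented link.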

Prompting is hard

Even with clean, comprehensive data and well-defined inputs, the LLM must be given a prompt. Getting these right is tricky.

Generating a funny image with DALL-E is easy. Grading a sales engineer’s hour-long product demo transcript against 25 criteria is hard.

We’ve taken multiple deep dives into prompt engineering, even creating chains of LLMs to progressively develop prompts for highly specific use cases. The reality is that prompt engineering is both a key factor in the quality of LLM output and a tough thing to do well for complex use cases, across a wide data set, at a user level, at scale.

From a product perspective, this is a big question: how much of the prompt do you productize? How much productization is possible, given the differences in business models, datasets, and use cases? Does productization even make sense when the prompt ecosystem is already robust (if fragmented)? What is the “prompt jurisdiction” between us as an LLM use case enabler and the customer implementing the use case?

GPT is free and easy. AI infrastructure with a feedback loop isn’t.

MLOps has been around for a long time and offers clear methodologies for creating a feedback loop that you can leverage to improve model accuracy.

GPT makes AI seem like free magic, but the subjective nature of the examples above creates a challenging engineering and data science ops problem. How do you detect hallucinations? How do you fix them? How do you improve the model over time?

Like any infrastructure, there are managed services to make those things easier, but there’s quite a bit of distance between pinging GPT and running a production RAG application at scale. We’re actively building on Snowflake Cortex and similar technologies to help solve these problems, but there’s still a ways to go to establish enterprise-scale infrastructure for feedback loops as they relate to customer data.

Run your own LLM experiments with RudderStack

We’re just scratching the surface of possibility with these LLM customer data use cases, and we’re already impressed with the results. Things will only get better as models improve and we build more efficient processes.

If you’re working on similar applications for generative AI, please reach out. We’d love to hear about your learnings and share more about what we’re working on. We’ll be posting deeper dives into each of the prototypes we covered here in the coming weeks, so follow us on LinkedIn to get updates.


Published: May 23, 2024
