DATA GOVERNANCE

CDPs in 2026? Delivering trustworthy customer context for AI

by Soumyadeb Mitra

DATA GOVERNANCE

AI is a stress test: How the modern data stack breaks under pressure

by Brooks Patterson

DATA GOVERNANCE

Data trust is death by a thousand paper cuts

by Soumyadeb Mitra

RudderStack Updates

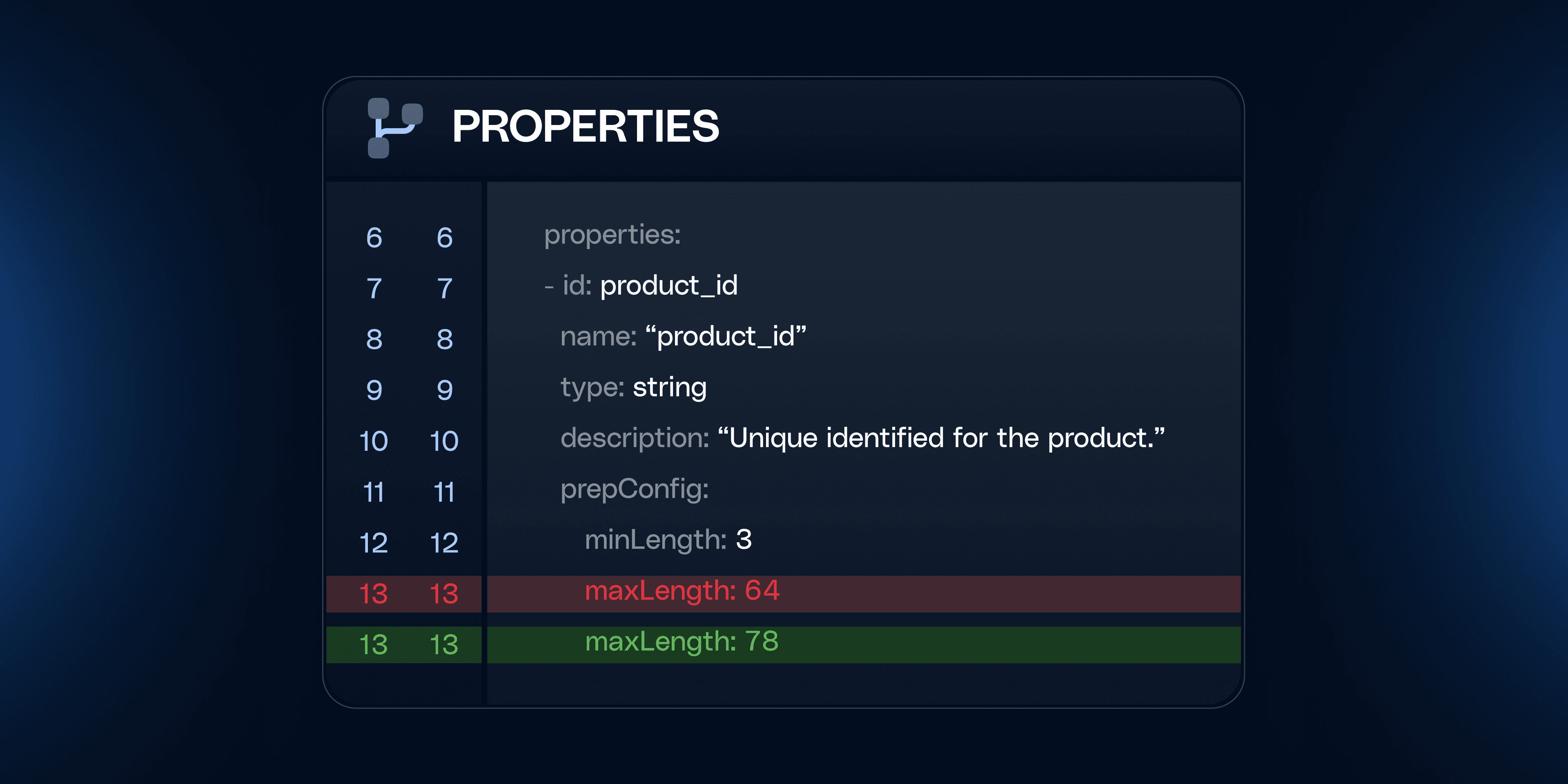

New IaC-driven governance supports trustworthy customer context

by Mackenzie Hastings

RudderStack Updates

Beyond the modern data stack: The customer context engine for the AI era

by Brooks Patterson

Data Infrastructure

What is Apache Airflow? A guide for data engineers

by Pradeep Sharma

Data Enablement

Mobile measurement partners: What they are and how to choose

by Danika Rockett

Data Infrastructure

From product usage to sales pipeline: Building PQLs that actually convert

by Soumyadeb Mitra

Data Governance

CDPs in 2026? Delivering trustworthy customer context for AI

by Soumyadeb Mitra

Identity Resolution

What is entity resolution? Use cases and best practices

by Pradeep Sharma

Data Infrastructure

How we track multi-agent AI systems without losing visibility into agent orchestration

by Anudeep

Data Governance

AI is a stress test: How the modern data stack breaks under pressure

by Brooks Patterson