Introducing Rudder AI Reviewer: Catch bad tracking before it ships

Rudder AI Reviewer brings automated instrumentation review directly into your GitHub PRs, so bad tracking doesn't make it to production.

We've talked a lot this year about what it means to build trustworthy customer data infrastructure for the AI era. About how AI agents are only as reliable as the context they're given. About how broken data, once tolerable when it just skewed a dashboard, now becomes a scaled liability when it's powering customer-facing systems that act without direct supervision.

But there's a problem we don't talk about enough: Bad data starts at instrumentation time, not at the warehouse.

By the time a malformed event reaches your analytics tools, your warehouse, or your AI models, the damage is already done. The event fired. The page view was tracked. The conversion was missed. And no amount of downstream cleanup fully recovers from upstream capture errors.

The fix isn't better monitoring after the fact. It's better enforcement at the source, and that means your code review process.

Tracking code is code. Treat it that way.

When your team ships a new feature, the tracking code typically lives in the same PR as the production code. Unfortunately, instrumentation decisions (event names, property schemas, and how they align to the tracking plan) rarely receive the same level of PR scrutiny as application code. They are made quickly, reviewed by folks focused on the feature functionality rather than data design, and merged without a consistent, systematic validation layer.

The result is familiar: checkout_started in one place, CheckoutStarted in another, and checkout_begin somewhere else. Three names for the same event, scattered across your codebase over six months of shipping. Your analyst's query breaks. Your marketing team's funnel is wrong. Your AI model trained on this data? It's learned a fragmented view of your customer journey.

This is the kind of thing that's obvious in hindsight and almost invisible in the moment of a PR review.

Introducing Rudder AI Reviewer

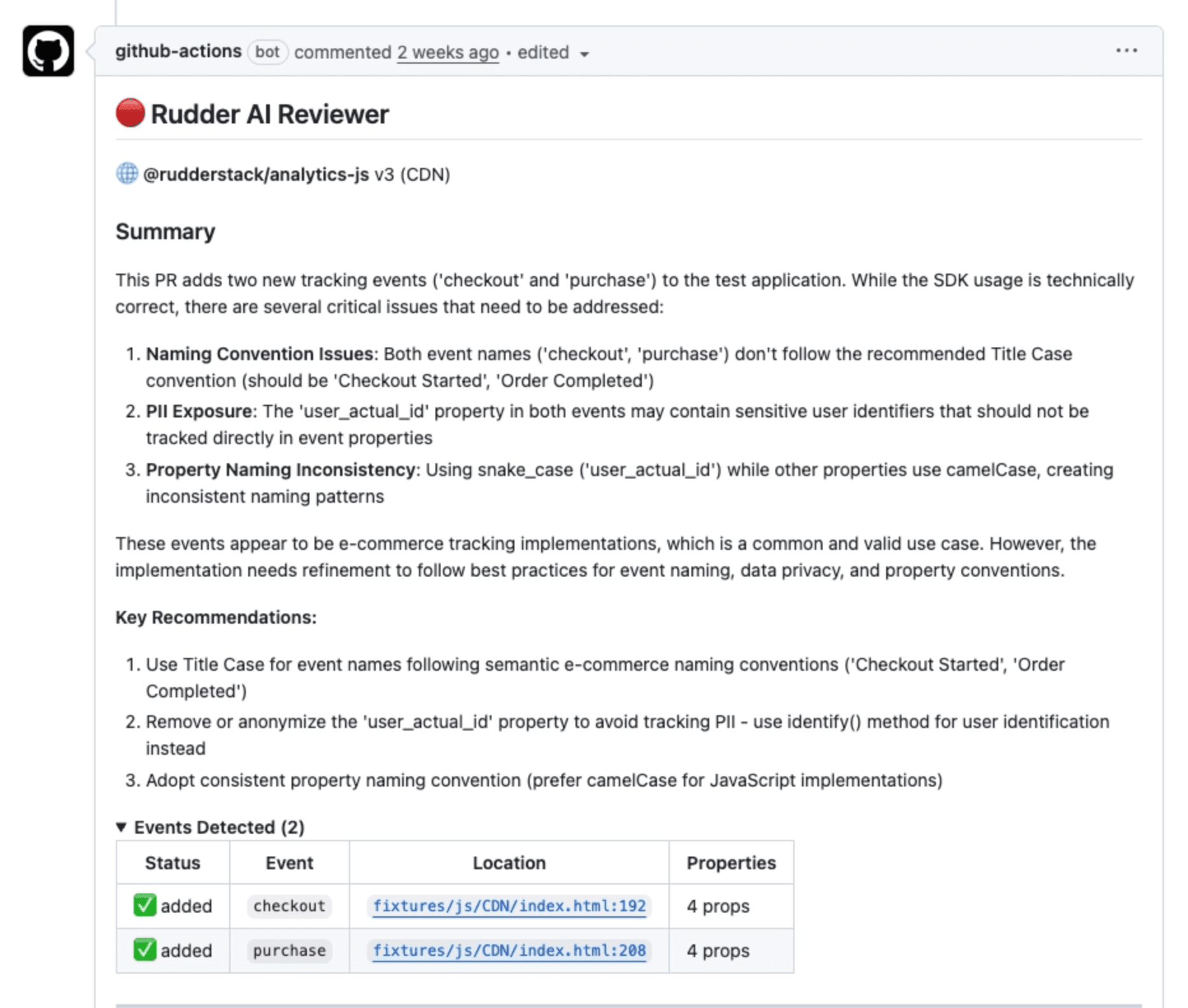

Rudder AI Reviewer is a GitHub Action that automatically reviews pull requests for RudderStack instrumentation quality. When a developer opens or updates a PR that touches tracking code, the Reviewer analyzes the changes and posts its findings directly in GitHub, both as inline comments on specific lines of code and as a summary comment on the PR itself.

The Reviewer checks for three categories of issues:

1. Tracking plan compliance. If you've defined a tracking plan in RudderStack, the Reviewer validates new and modified events against it. Events that don't exist in your plan, properties with the wrong types, and missing required fields all surface as PR comments before anything merges.

2. Best practices. RudderStack has a well-defined event spec and a set of instrumentation patterns that teams often get wrong. The Reviewer flags these: inconsistent event naming conventions, missing userId or anonymousId, calling identify without a track, and similar structural issues that make your data harder to use downstream.

3. Event name fragmentation. This one is subtle and almost never caught in human review. The Reviewer detects when a new event name is too similar to an existing one, flagging potential duplicates before they compound into the kind of naming debt that takes months to clean up.
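To make the first and third checks concrete, here is a minimal Python sketch of what plan validation and near-duplicate name detection could look like. It is an illustration only, not how the Reviewer is implemented: the `TRACKING_PLAN` structure, the function names, and the 0.6 similarity threshold are all assumptions chosen for the example.

```python
import re
from difflib import SequenceMatcher

# Hypothetical tracking plan: event name -> {property: (expected type, required?)}.
# A real RudderStack tracking plan has its own format; this is a stand-in.
TRACKING_PLAN = {
    "checkout_started": {
        "cart_id": (str, True),
        "total": (float, True),
        "coupon": (str, False),
    },
}


def normalize(name: str) -> str:
    """Map camelCase, PascalCase, spaced, and snake_case names to lower snake_case."""
    split_camel = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)
    return re.sub(r"[\s\-_]+", "_", split_camel).strip("_").lower()


def check_against_plan(event: str, properties: dict) -> list[str]:
    """Return human-readable issues for one event call (empty list if compliant)."""
    schema = TRACKING_PLAN.get(event)
    if schema is None:
        return [f"event '{event}' is not in the tracking plan"]
    issues = []
    for prop, (expected_type, required) in schema.items():
        if prop not in properties:
            if required:
                issues.append(f"missing required property '{prop}'")
        elif not isinstance(properties[prop], expected_type):
            issues.append(f"property '{prop}' should be {expected_type.__name__}")
    return issues


def find_similar_names(new_name: str, existing: list[str], threshold: float = 0.6) -> list[str]:
    """Return existing event names whose normalized form resembles the new name."""
    candidate = normalize(new_name)
    return [
        name
        for name in existing
        if name != new_name
        and SequenceMatcher(None, candidate, normalize(name)).ratio() >= threshold
    ]


if __name__ == "__main__":
    print(check_against_plan("checkout_started", {"cart_id": "c1", "total": "19.99"}))
    print(find_similar_names("checkout_begin", ["checkout_started", "page_viewed"]))
```

In this sketch, `CheckoutStarted` and `checkout_started` normalize to the same string, and `checkout_begin` is flagged as a likely duplicate of `checkout_started`. A plain string-similarity threshold also produces false positives on names that share a prefix, which is one reason a fixed cutoff like this is only a starting point.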

How it fits into your workflow

Setup is a single GitHub Action configuration in your repository. Once enabled, every PR that touches instrumentation gets automatically reviewed. Your developers see the feedback in the same place they see linting errors and test failures: in the PR itself, on the specific lines that need attention.

Rudder AI Reviewer summary comment

The experience looks like a code reviewer who knows RudderStack deeply: inline comments explaining what's wrong and why, with a summary at the top of the PR that gives reviewers and authors a quick read on the overall instrumentation health of the change.

Human reviewers still approve and merge. The Reviewer handles the RudderStack-specific checks they'd otherwise miss or have to look up manually.

This is what governance at the source looks like

We've been investing heavily in infrastructure-as-code for data governance. This includes code-based tracking plans, CI-driven validation, and version-controlled data catalogs. The philosophy behind all of them is the same: governance works best when it's automated, systematic, and enforced early in the development lifecycle, not patched in after the fact.

Rudder AI Reviewer is a direct expression of that philosophy applied to the instrumentation layer. It's governance baked into the developer workflow, not bolted on after bad data has already shipped.

The bar for data trust has never been higher. AI systems that act on your customer data need clean inputs, governed from the moment of capture. That starts with the PR.

Rudder AI Reviewer is in public beta. Check out the setup guide to get started, or reach out to the team if you want to talk through how it fits into your stack.

Published: February 19, 2026