Feature launch: Transformations for real-time schema fixes

Bad data causes far-reaching problems. If dirty data hits your warehouse and gets propagated downstream, it can touch every part of your company. Consequences range from broken dashboards before board meetings to compromised customer experiences because of incorrect data. The worst-case scenario is strategic missteps based on inaccurate analysis. Bad data compromises data trust, and if you can’t trust your data you can’t be a data-driven company.

Tracking Plans enable you to catch violations and quarantine or drop bad data before it gets downstream. But without remedying the bad data, you’re left with an incomplete dataset and limited ability to deliver powerful customer experiences.

Today, we’re introducing real-time schema fixes with Transformations so you can fix bad data while maintaining an efficient workflow. This feature is part of our Data Quality Toolkit, a set of capabilities that help you guarantee quality data from the source so you can spend less time wrangling and more time helping your business drive revenue.

Bad data fixes without the headaches

When data violates tracking plan definitions, most teams are stuck with two painful options:

- Work with software engineers to update instrumentation and implement a fix. This requires websites and apps to be redeployed, subjecting your fix to dev cycles that involve testing/staging and potentially lengthy deployment processes.

- Let the event into the warehouse and fix the problems in post with SQL or Python. This can result in hundreds (or thousands) of edge cases over time. It also requires additional integrations and pipelines (and cost) to replay the cleaned data back into downstream systems.

What’s needed is a way to identify violations and fix them in real time, as they are caught. With RudderStack Tracking Plans and Transformations, you can do just that.

Transformations is a code-based feature that lets you manipulate and enrich your event payloads in real time. When Tracking Plans flags violations, you can use Transformations to fix schema errors and even preserve warehouse schemas used for critical reporting. You can apply your fixes globally or customize them for each destination. Because Transformations run in the pipeline, after collection and before delivery, you don’t need to submit a dev ticket. You can fix bad data without redeploying sites or apps, an especially critical benefit during code freezes.

As with all things at RudderStack, we built Transformations with data teams in mind. You can write your fixes in JavaScript or Python and version control the code with your existing dev workflow.

Ensuring data quality with Transformations

Violations can occur for a variety of reasons. Using Tracking Plans, you can enrich your events with violation information. Then, you can use Transformations to correct violations quickly before they impact your stakeholders downstream. Here are a few examples of how you can use Transformations to ensure data quality.

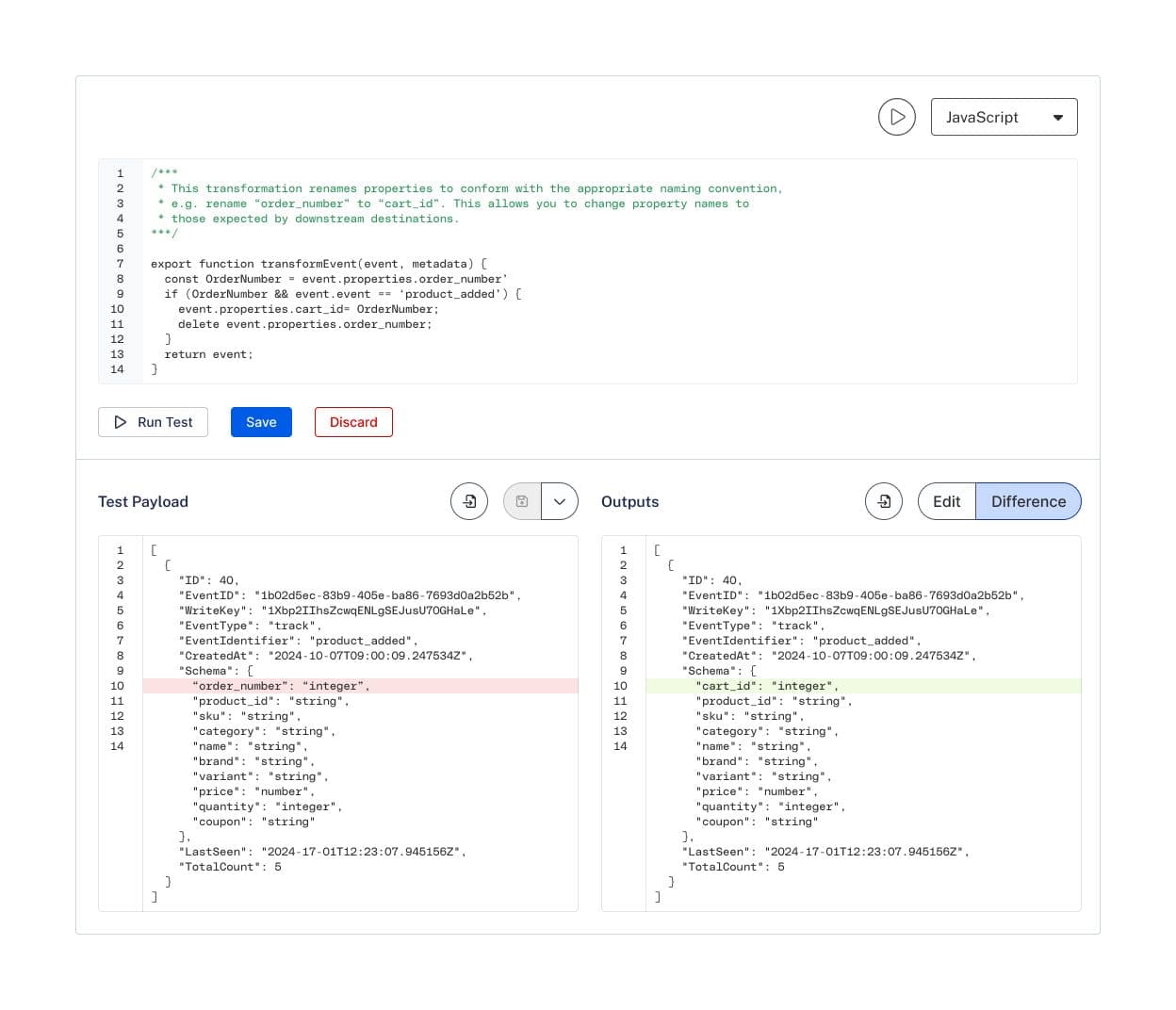

Change property and trait names: Whether the cause is a mismatch with your destination, mistaken instrumentation, or a field name that marketing changed without warning, Transformations let you rename event fields without opening a dev ticket, so the data matches the destination schema before it gets there.
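As a minimal sketch of a rename, assume the source sends a property called `prod_id` while the destination schema expects `product_id`. Both field names and the `RENAMES` map are hypothetical. RudderStack JavaScript transformations expose a `transformEvent(event, metadata)` entry point; inside the product it is declared with `export`, which is omitted here so the snippet runs as a plain script.

```javascript
// Hypothetical mapping from the instrumented name to the name the destination expects.
const RENAMES = { prod_id: "product_id" };

// Entry point for a JavaScript transformation (declared with `export` in RudderStack).
function transformEvent(event, metadata) {
  const props = event.properties;
  if (!props) return event; // nothing to rename, e.g. an identify call

  for (const [oldName, newName] of Object.entries(RENAMES)) {
    // Rename only when the old key exists and the new key is not already set,
    // so a correctly instrumented event is never overwritten.
    if (oldName in props && !(newName in props)) {
      props[newName] = props[oldName];
      delete props[oldName];
    }
  }
  return event;
}
```

The same pattern could be applied to `event.traits` or `event.context.traits` if the mismatch is in a trait name rather than a property name.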

Convert data types: Sometimes a 1 isn’t a 1. Suppose you add a new destination that requires a different data type than you originally instrumented: your payload includes the string ‘1’ while the new destination requires the integer 1. Transformations make this easy to handle. You can write a destination-specific transformation that converts the value to an integer for the new destination while leaving it as a string for all other destinations, including your warehouse.
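A type conversion like this might look as follows. This is a sketch under two assumptions: the property is a hypothetical `quantity` field that arrives as a string such as "1", and the transformation is connected only to the destination that needs an integer, so every other destination keeps the original value.

```javascript
// Entry point for a JavaScript transformation (declared with `export` in RudderStack).
function transformEvent(event, metadata) {
  const props = event.properties || {};
  const raw = props.quantity; // hypothetical property name

  // Convert only strings that are clean integers; anything else is left
  // untouched so it can still be flagged rather than silently coerced.
  if (typeof raw === "string" && /^-?\d+$/.test(raw.trim())) {
    props.quantity = parseInt(raw.trim(), 10);
  }
  return event;
}
```

Leaving unparseable values unchanged is a deliberate choice here: a value like "one" stays visible as a violation instead of becoming `NaN` downstream.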

Reformat payload structures: You can use Transformations to reshape a payload in a situation where you need to simplify and flatten the structure. For instance, if you have an array of products that’s deeply nested, you can change it with a Transformation to make each attribute a top-level field.
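One way to sketch the flattening is a small recursive helper that promotes every nested value to a top-level property. The `flatten` helper and the underscore-joined key convention (for example `cart_products_0_sku`) are illustrative assumptions, not a RudderStack convention; pick whatever naming your destination expects.

```javascript
// Recursively copy nested values into `out` using underscore-joined keys,
// e.g. { a: { b: [ { c: 1 } ] } } becomes { a_b_0_c: 1 }.
// Empty objects and arrays produce no keys.
function flatten(value, prefix, out) {
  if (value !== null && typeof value === "object") {
    for (const [key, child] of Object.entries(value)) {
      flatten(child, prefix ? `${prefix}_${key}` : key, out);
    }
  } else {
    out[prefix] = value;
  }
  return out;
}

// Entry point for a JavaScript transformation (declared with `export` in RudderStack).
function transformEvent(event, metadata) {
  if (event.properties !== null && typeof event.properties === "object") {
    event.properties = flatten(event.properties, "", {});
  }
  return event;
}
```

For a payload whose `properties.cart.products` array holds two items with `sku` and `price`, this yields `cart_products_0_sku`, `cart_products_0_price`, `cart_products_1_sku`, and `cart_products_1_price` as top-level properties.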

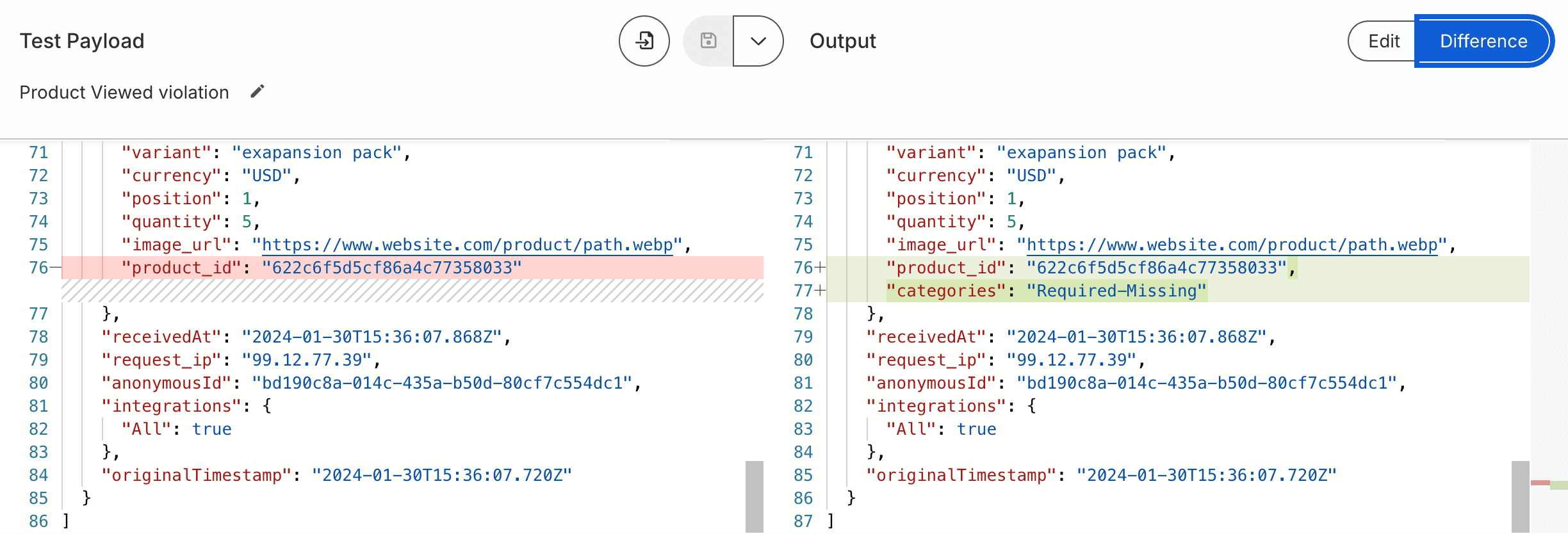

Send error diagnostics to downstream systems: Fixing bad data is helpful, but payloads are often missing critical information altogether. To mitigate problems with analytics and marketing automation, you can forward diagnostic information from the violationErrors object in the payload to downstream tools. Teams can then use it to fix analytics quickly and to exclude users from marketing automations that would otherwise send incomplete or inaccurate messages built on the missing data.
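The sketch below shows one way to forward that diagnostic information. It assumes the violations sit at `event.context.violationErrors` as an array of objects with `type` and `message` fields; verify the exact location and shape against your own payloads. The output property names (`has_violations`, `violation_count`, and so on) are hypothetical.

```javascript
// Entry point for a JavaScript transformation (declared with `export` in RudderStack).
function transformEvent(event, metadata) {
  // Assumed location and shape of the violation data; check your payloads.
  const violations = (event.context && event.context.violationErrors) || [];
  if (!Array.isArray(violations) || violations.length === 0) return event;

  event.properties = event.properties || {};
  // Hypothetical flat fields that downstream tools can filter or segment on.
  event.properties.has_violations = true;
  event.properties.violation_count = violations.length;
  event.properties.violation_types = [...new Set(violations.map((v) => v.type))].join(",");
  event.properties.violation_messages = violations.map((v) => v.message).join(" | ");
  return event;
}
```

A marketing tool could then exclude any event where `has_violations` is true, while an analytics team could group by `violation_types` to see which instrumentation needs fixing first.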

Getting started

When you use customer data for business-critical applications, bad data can wreak havoc. If you want to turn your customer data into a competitive advantage, it’s not enough to simply keep bad data out of your downstream systems. You have to fix it. Traditional fixes involve high-cost tradeoffs and take time.

Transformations allow you to quickly implement resilient fixes for bad data, so you can spend less time wrangling and more time helping your business drive revenue.

To learn more about how you can use Transformations to ensure data quality, check out the docs. To see Transformations along with the rest of our Data Quality Toolkit, request a demo with our team or sign up for our webinar featuring data quality expert Chad Sanderson on guaranteeing quality customer data from the source.

Published: January 31, 2024
