
What Is Data Integration?

Data integration poses significant challenges for businesses striving to be more data-centric. Even straightforward scenarios can run into integration difficulties, and these challenges become more pronounced as complexity, data volume, and the need for real-time processing increase. Many companies struggle with isolated data silos, fragile APIs, and the difficult task of building and maintaining data pipelines that deliver quality data to the right end users for informed decision-making.

Integrating data often entails merging older systems with newer technologies. Moreover, as business requirements evolve and new use cases emerge, there can be a rapid expansion and shift in the variety of data sources and destinations. Developing and maintaining a streamlined architecture with effective automations and connectors to manage these complexities in a scalable way demands a strategic approach to data integration.

A well-executed data integration plan is crucial for achieving efficient and precise data analysis, especially in the context of data warehouses.

What is Data Integration?

Data integration is the practice of merging and consolidating data from disparate sources into a unified dataset. Its primary objective is to give users consistent access to data that spans different subjects and structural formats, so that the information needs of every application and business process can be met. As the need to share existing data and to integrate big data grows, data integration plays a pivotal role within the broader data management framework.

Data often ends up dispersed across the many tools and databases a business uses in its daily operations. These tools and databases are frequently administered by different departments: Sales oversees the CRM, Customer Support relies on a help desk system, and the Analytics team works in the data warehouse. The data in these tools also comes in different formats, including structured, semi-structured, and unstructured data.

This scenario inevitably leads to the formation of data silos, where each team possesses only a fragmented view of the overall business functioning. This is where data integration assumes a crucial role.

Data integration serves to harmonize this otherwise fragmented data, creating a singular, authoritative source of information. This integration can be executed through ETL (Extract, Transform, Load) pipelines, which extract data from disparate sources, standardize its format, and subsequently load it into a central repository, such as a data warehouse or data lake.
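As an illustration of the ETL flow just described, here is a minimal Python sketch, not RudderStack's implementation or any specific tool's. It assumes two hypothetical source extracts (a CRM export and a help desk export whose field names and formats differ), uses a trimmed, lowercased email as the shared customer key, and uses an in-memory SQLite database as a stand-in for the warehouse. The `customers` table and every function name are illustrative.

```python
import sqlite3
from datetime import datetime

# Hypothetical raw extracts: a CRM export and a help desk export that describe
# the same customers with different field names and formatting.
CRM_ROWS = [
    {"Email": "Ada@Example.com", "FullName": "Ada Lovelace", "Plan": "pro"},
    {"Email": "grace@example.com", "FullName": "Grace Hopper", "Plan": "free"},
]
HELPDESK_TICKETS = [
    {"requester": "ada@example.com ", "opened": "2024-03-01T10:15:00Z"},
    {"requester": "ADA@example.com", "opened": "2024-03-05T08:00:00Z"},
]


def extract():
    """Extract: take copies of the records held by each source system."""
    return [dict(r) for r in CRM_ROWS], [dict(t) for t in HELPDESK_TICKETS]


def normalize_email(value):
    """Use a trimmed, lowercased email as the shared customer key."""
    return value.strip().lower()


def empty_customer(email):
    return {"email": email, "name": None, "plan": None,
            "ticket_count": 0, "last_ticket_at": None}


def transform(crm_rows, tickets):
    """Transform: standardize keys and formats, then merge per customer."""
    customers = {}
    for row in crm_rows:
        email = normalize_email(row["Email"])
        record = customers.setdefault(email, empty_customer(email))
        record["name"] = row["FullName"]
        record["plan"] = row["Plan"]
    for ticket in tickets:
        email = normalize_email(ticket["requester"])
        record = customers.setdefault(email, empty_customer(email))
        # Parse ISO 8601 timestamps so they compare correctly ("Z" -> UTC offset).
        opened = datetime.fromisoformat(ticket["opened"].replace("Z", "+00:00"))
        record["ticket_count"] += 1
        if record["last_ticket_at"] is None or opened > record["last_ticket_at"]:
            record["last_ticket_at"] = opened
    return list(customers.values())


def load(conn, records):
    """Load: write the unified records into the central repository."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "email TEXT PRIMARY KEY, name TEXT, plan TEXT, "
        "ticket_count INTEGER, last_ticket_at TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?, ?)",
        [
            (r["email"], r["name"], r["plan"], r["ticket_count"],
             r["last_ticket_at"].isoformat() if r["last_ticket_at"] else None)
            for r in records
        ],
    )
    conn.commit()


def run_etl(conn):
    crm_rows, tickets = extract()
    load(conn, transform(crm_rows, tickets))


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    for row in conn.execute("SELECT * FROM customers ORDER BY email"):
        print(row)
```

Because the load step upserts on the primary key, rerunning the pipeline refreshes rows instead of duplicating them. That is one common way to keep a batch ETL job safe to retry.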

Data integration architects are responsible for developing software programs and platforms dedicated to data integration. These tools streamline and automate the process of connecting and directing data from source systems to target systems. This objective is achieved through a variety of data integration techniques, which encompass:

1. Extract, Transform, and Load (ETL): This method involves gathering copies of datasets from disparate sources, harmonizing them, and subsequently loading them into a data warehouse or database.

2. Extract, Load, and Transform (ELT): Data is loaded into a big data system in its raw form and transformed at a later stage to suit specific analytics purposes.

3. Change Data Capture (CDC): CDC identifies changes to data in source databases in real time and applies those changes to a data warehouse or other relevant repositories.

4. Data Replication: This technique involves replicating data from one database to others, ensuring synchronization for operational use and backup purposes.

5. Data Virtualization: Data from various systems is virtually amalgamated to create a unified view, eliminating the need to load data into a new repository.

6. Streaming Data Integration: This real-time data integration method continuously combines different data streams, feeding them into analytics systems and data stores as they are generated.
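To make the CDC technique in the list above more concrete, here is a small Python sketch of what a change stream contains and how it is applied to a target. Be aware that it swaps in a simpler technique: production CDC tools typically read the database's transaction log (for example, PostgreSQL's write-ahead log or MySQL's binlog) instead of comparing snapshots. This sketch diffs two snapshots keyed by primary key to produce insert, update, and delete events. The `Change` type and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


@dataclass
class Change:
    op: str              # "insert", "update", or "delete"
    key: Any             # primary key of the affected row
    row: Optional[Row]   # new row image; None for deletes


def capture_changes(previous: Dict[Any, Row], current: Dict[Any, Row]) -> List[Change]:
    """Compare two snapshots of a source table keyed by primary key."""
    changes: List[Change] = []
    for key, row in current.items():
        if key not in previous:
            changes.append(Change("insert", key, dict(row)))
        elif previous[key] != row:
            changes.append(Change("update", key, dict(row)))
    for key in previous:
        if key not in current:
            changes.append(Change("delete", key, None))
    return changes


def apply_changes(target: Dict[Any, Row], changes: List[Change]) -> None:
    """Replay captured changes, in order, against the target copy."""
    for change in changes:
        if change.op == "delete":
            target.pop(change.key, None)
        else:  # inserts and updates are both upserts on the target
            target[change.key] = dict(change.row)


if __name__ == "__main__":
    before = {1: {"name": "Ada", "plan": "free"}, 2: {"name": "Grace", "plan": "pro"}}
    after = {1: {"name": "Ada", "plan": "pro"}, 3: {"name": "Alan", "plan": "free"}}
    warehouse = {k: dict(v) for k, v in before.items()}
    changes = capture_changes(before, after)
    apply_changes(warehouse, changes)
    print([(c.op, c.key) for c in changes])
    print(warehouse == after)
```

Only the changed rows travel to the target, not the full table. That is the main reason CDC scales better than repeatedly reloading everything.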

Methods of Data Integration

Data integrations can take many forms. Teams can integrate data through iPaaS (integration platform as a service) solutions that sync data across operational systems. They can integrate data in their customer data platform (CDP). Or they can use ETL and other data pipelines to combine data during pre-processing. Each of these methods, in one way or another, takes data of various formats from different sources and brings it together.

In turn, analysts can work with the integrated data to answer critical questions and help the business become truly data-driven. Integration also benefits other end users. For example, operations teams can push the data into other systems, such as a CRM, to streamline business processes. What’s more, Reverse ETL lets teams operationalize data integrated in the warehouse and deliver it to downstream teams, who can use it to drive optimizations, increase engagement, and deliver a better customer experience.
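Here is a rough Python sketch of the Reverse ETL step mentioned above: read a modeled table from the warehouse, rename its columns to the destination's field names, and batch the results into request bodies. It is illustrative only. The `customer_scores` table, the `FIELD_MAP` field names, and the JSON payload shape are hypothetical. An in-memory SQLite database stands in for the warehouse, and the code builds payloads without calling any real CRM API.

```python
import json
import sqlite3
from typing import Any, Dict, Iterator, List

# Hypothetical mapping from warehouse columns to CRM field names.
FIELD_MAP = {"email": "Email", "lifetime_value": "LifetimeValue", "churn_risk": "ChurnRisk"}


def read_model(conn: sqlite3.Connection) -> List[Dict[str, Any]]:
    """Read the modeled table that analysts built in the warehouse."""
    cursor = conn.execute(
        "SELECT email, lifetime_value, churn_risk FROM customer_scores ORDER BY email"
    )
    columns = [description[0] for description in cursor.description]
    return [dict(zip(columns, values)) for values in cursor.fetchall()]


def to_crm_record(row: Dict[str, Any]) -> Dict[str, Any]:
    """Rename warehouse columns to the destination's field names."""
    return {crm_field: row[column] for column, crm_field in FIELD_MAP.items()}


def batched(records: List[Dict[str, Any]], size: int) -> Iterator[List[Dict[str, Any]]]:
    """Split records into fixed-size chunks, as many APIs cap batch sizes."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def build_sync_payloads(conn: sqlite3.Connection, batch_size: int = 100) -> List[str]:
    """Produce JSON request bodies; sending them is left to a real CRM client."""
    records = [to_crm_record(row) for row in read_model(conn)]
    return [json.dumps({"records": batch}) for batch in batched(records, batch_size)]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customer_scores (email TEXT, lifetime_value REAL, churn_risk TEXT)")
    conn.executemany(
        "INSERT INTO customer_scores VALUES (?, ?, ?)",
        [("a@example.com", 120.0, "low"), ("b@example.com", 15.5, "high"),
         ("c@example.com", 0.0, "medium")],
    )
    for payload in build_sync_payloads(conn, batch_size=2):
        print(payload)
```

The field mapping sits in a single dictionary, so the destination's schema can change without touching the query or batching logic.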

The History of Data Integration

Data integration, a pivotal component of data management, began its journey in the 1980s with the advent of ETL (extract, transform, load) tools. These tools automated the transfer of data and enabled interoperability between disparate databases, that is, the ability of these systems to exchange information despite their differing data structures. Those structural differences are what made the transform step in ETL necessary.

A significant milestone in the history of data integration came in 1991, when the University of Minnesota developed the first data integration system driven by structured metadata for the Integrated Public Use Microdata Series (IPUMS). This groundbreaking system amalgamated thousands of population databases and international census data, showing the potential of large-scale data integration for collating vast datasets.

As the digital landscape evolved, data integration faced increasingly complex challenges. Exponential growth in data volume and velocity, together with widespread adoption of the internet and cloud-based technologies, added layers of complexity. Particularly notable was the growing demand for real-time data access through event streaming, which had to coexist with traditional batch processing.

In response to these evolving challenges, the field of data integration has witnessed the emergence of novel technologies and methodologies. The use of APIs has become commonplace, serving as vital communication bridges between diverse tools and data sources. Concurrently, the concept of data lakes has gained traction, offering scalable storage solutions for the vast quantities of data generated in the digital age. Additionally, data hubs have emerged as central platforms for managing, unifying, and processing data. These hubs not only ensure data security and compliance but also streamline the handling of complex data workflows.

The evolution of data integration reflects a continuous adaptation to the dynamic demands of data management, highlighting its critical role in shaping the way businesses and organizations harness the power of data.

Application Integration vs Data Integration

The development of data integration technologies emerged as a vital response to the widespread use of relational databases, catering to the essential need for efficient data movement among them. This primarily involved handling 'data at rest', or data that is not actively moving from system to system. In a contrasting approach, application integration focuses on the real-time management and integration of live, operational data across multiple applications.

Application integration is designed to ensure that applications, often developed independently and with distinct purposes, can function cohesively. This necessitates maintaining data consistency across various data repositories, effectively managing the integrated workflow of multiple tasks executed by different applications, and providing a unified interface or service. This unified platform facilitates access to data and functionalities from these distinct applications, mirroring some of the core objectives of data integration, such as uniformity and accessibility.

A prevalent method for achieving application integration in today's digital ecosystem is through cloud data integration. This approach involves a suite of tools and technologies that establish connections between various applications, allowing for the real-time exchange of data and processes. It is characterized by its ability to provide access across multiple devices, either through a network or via the internet. This method offers significant advantages in terms of scalability, flexibility, and the ability to handle large volumes of data efficiently, making it a cornerstone in modern application integration strategies.

As the digital landscape continues to evolve, both data and application integration technologies are becoming increasingly vital in enabling businesses to harness the full potential of their data assets and application portfolios. These integrations facilitate seamless data flow and operational efficiency, proving essential in the age of digital transformation and the drive towards more interconnected and intelligent business systems.

Why is Data Integration Important?

Businesses aiming to stay competitive and relevant are increasingly adopting big data, recognizing both its advantages and challenges. Data integration is a key element in leveraging these vast datasets, enhancing various aspects such as business intelligence, customer data analytics, data enrichment, and the provision of real-time information.

One of the primary applications of data integration services and solutions lies in the realm of business and customer data management. Through enterprise data integration, businesses can channel integrated data into data warehouses or utilize virtual data integration architectures. This supports a range of functions including enterprise reporting, business intelligence (known as BI data integration), and sophisticated enterprise analytics.

Integrated data is especially valuable because it gives business managers and data analysts a comprehensive view of vital metrics and operations. These include key performance indicators (KPIs), financial risks, customer profiles, manufacturing and supply chain operations, regulatory compliance, and other critical business process elements.

Furthermore, data integration is important in healthcare. By combining data from patient records and clinical sources into a cohesive, informative view, it helps medical professionals diagnose conditions and diseases more effectively. In the insurance sector, effective data acquisition and integration improve the accuracy of claims processing. Integration also keeps patient records, including names and contact details, consistent and accurate. Interoperability, the ability of different systems to exchange and use information, is a vital aspect of this process.

Data integration is equally crucial in sectors beyond healthcare, such as in the financial industry. In this context, integrating data from various customer accounts and transaction histories enables financial analysts and advisors to provide more accurate financial advice and risk assessments. By consolidating information from diverse banking systems and financial instruments into a coherent overview, financial institutions can extract valuable insights for decision-making. Effective data gathering and integration also enhance the precision in processing loan applications and managing investment portfolios. Moreover, it ensures consistent and accurate maintenance of customer profiles, including personal and transactional data. The seamless exchange and utilization of information across different financial systems, known as interoperability, is a key component in this process, enabling more efficient and customer-centric banking services.

What is Big Data Integration?

Big data integration encompasses sophisticated processes designed to handle the immense volume, diversity, and rapid influx of big data. This integration brings together data from various sources like web content, social media interactions, machine-generated data, and Internet of Things (IoT) outputs into a unified structure.

Platforms dedicated to big data analytics demand scalability and high-performance capabilities, underscoring the necessity for a unified data integration platform. Such a platform should facilitate data profiling and ensure data quality, ultimately aiding in generating comprehensive and current insights about an enterprise.
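To illustrate the data profiling and quality checks mentioned above, here is a small Python sketch. It computes each column's null rate, distinct-value count, and observed value types across records from mixed sources. It then flags columns that break two simple rules. The rules, the 20% threshold, and the sample IoT and web records are hypothetical. A real platform would run equivalent checks at scale inside its own engine.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def profile(records: List[Record]) -> Dict[str, Dict[str, Any]]:
    """Per-column null rate, distinct-value count, and observed value types."""
    columns = sorted({key for record in records for key in record})
    total = len(records)
    result: Dict[str, Dict[str, Any]] = {}
    for column in columns:
        # A missing key counts as a null for that record.
        values = [record.get(column) for record in records]
        present = [v for v in values if v is not None]
        result[column] = {
            "null_rate": (total - len(present)) / total if total else 0.0,
            # repr() lets unhashable values (lists, dicts) be counted too.
            "distinct": len({repr(v) for v in present}),
            "types": sorted({type(v).__name__ for v in present}),
        }
    return result


def quality_issues(stats: Dict[str, Dict[str, Any]], max_null_rate: float = 0.2) -> List[str]:
    """Flag columns that break two simple, hypothetical quality rules."""
    issues = []
    for column, s in stats.items():
        if s["null_rate"] > max_null_rate:
            issues.append(f"{column}: null rate {s['null_rate']:.0%} exceeds {max_null_rate:.0%}")
        if len(s["types"]) > 1:
            issues.append(f"{column}: mixed types {s['types']}")
    return issues


if __name__ == "__main__":
    # Hypothetical records from an IoT feed and a web clickstream.
    records = [
        {"source": "iot", "device_id": "t-1", "temp_c": 21.5},
        {"source": "iot", "device_id": "t-2", "temp_c": "22.1"},
        {"source": "iot", "device_id": "t-3", "temp_c": None},
        {"source": "web", "user_id": 42, "page": "/pricing"},
    ]
    for issue in quality_issues(profile(records)):
        print(issue)
```

In this sample, the mixed `float`/`str` values in `temp_c` are the kind of defect that should be normalized before the data reaches a unified structure. High null rates in source-specific columns may be expected and simply call for per-source rules.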

Big data integration services adopt real-time integration methods, which extend traditional ETL (Extract, Transform, Load) technologies to handle the continuous flow of streaming data. Best practices for real-time data integration account for the ever-changing, transient, and often imperfect nature of the data. They include rigorous up-front testing and simulation, adoption of real-time systems and applications, parallel and coordinated data ingestion engines, and resilience at each stage of the data pipeline so it can withstand component failures. Standardizing data sources through APIs also helps teams derive deeper insights.

Through these practices, big data integration services effectively manage the complexities of modern data environments, enabling organizations to harness the full potential of their data assets.
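Two of the practices described above are parallel ingestion and resilience to component failures. A minimal Python sketch of both follows: it fetches partitions concurrently and retries transient errors with exponential backoff and jitter. The `FlakySource` class, the partition names, and the retry defaults are hypothetical. A production engine would also add checkpointing and dead-letter handling, which are omitted here.

```python
import random
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple, Type, TypeVar

T = TypeVar("T")
TRANSIENT_ERRORS: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError)


def with_retries(fn: Callable[[], T], attempts: int = 4, base_delay: float = 0.1) -> T:
    """Call fn, retrying transient errors with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts + 1):
        try:
            return fn()
        except TRANSIENT_ERRORS:
            if attempt == attempts:
                raise  # give up: surface the last error to the caller
            delay = base_delay * 2 ** (attempt - 1)
            time.sleep(delay + random.uniform(0, delay))
    raise AssertionError("unreachable")


def ingest_partitions(partitions: List[str], fetch: Callable[[str], List[dict]],
                      workers: int = 4, **retry_options) -> List[dict]:
    """Fetch partitions in parallel; each fetch gets its own retry budget."""
    def fetch_one(partition: str) -> List[dict]:
        return with_retries(lambda: fetch(partition), **retry_options)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        batches = list(pool.map(fetch_one, partitions))  # preserves input order
    return [row for batch in batches for row in batch]


class FlakySource:
    """Hypothetical source whose first `failures` calls per partition fail."""

    def __init__(self, failures: int = 2):
        self.failures = failures
        self.calls: Dict[str, int] = {}
        self._lock = threading.Lock()

    def fetch(self, partition: str) -> List[dict]:
        with self._lock:  # calls arrive from several worker threads
            self.calls[partition] = self.calls.get(partition, 0) + 1
            call_number = self.calls[partition]
        if call_number <= self.failures:
            raise ConnectionError(f"transient failure reading {partition}")
        return [{"partition": partition, "offset": i} for i in range(2)]


if __name__ == "__main__":
    source = FlakySource(failures=2)
    rows = ingest_partitions(["p0", "p1", "p2"], source.fetch, base_delay=0.01)
    print(len(rows), source.calls)
```

Giving each partition its own retry budget means one slow or failing partition does not force the others to start over. Adding jitter to the backoff spreads out retries so many workers do not hit a recovering source at the same moment.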

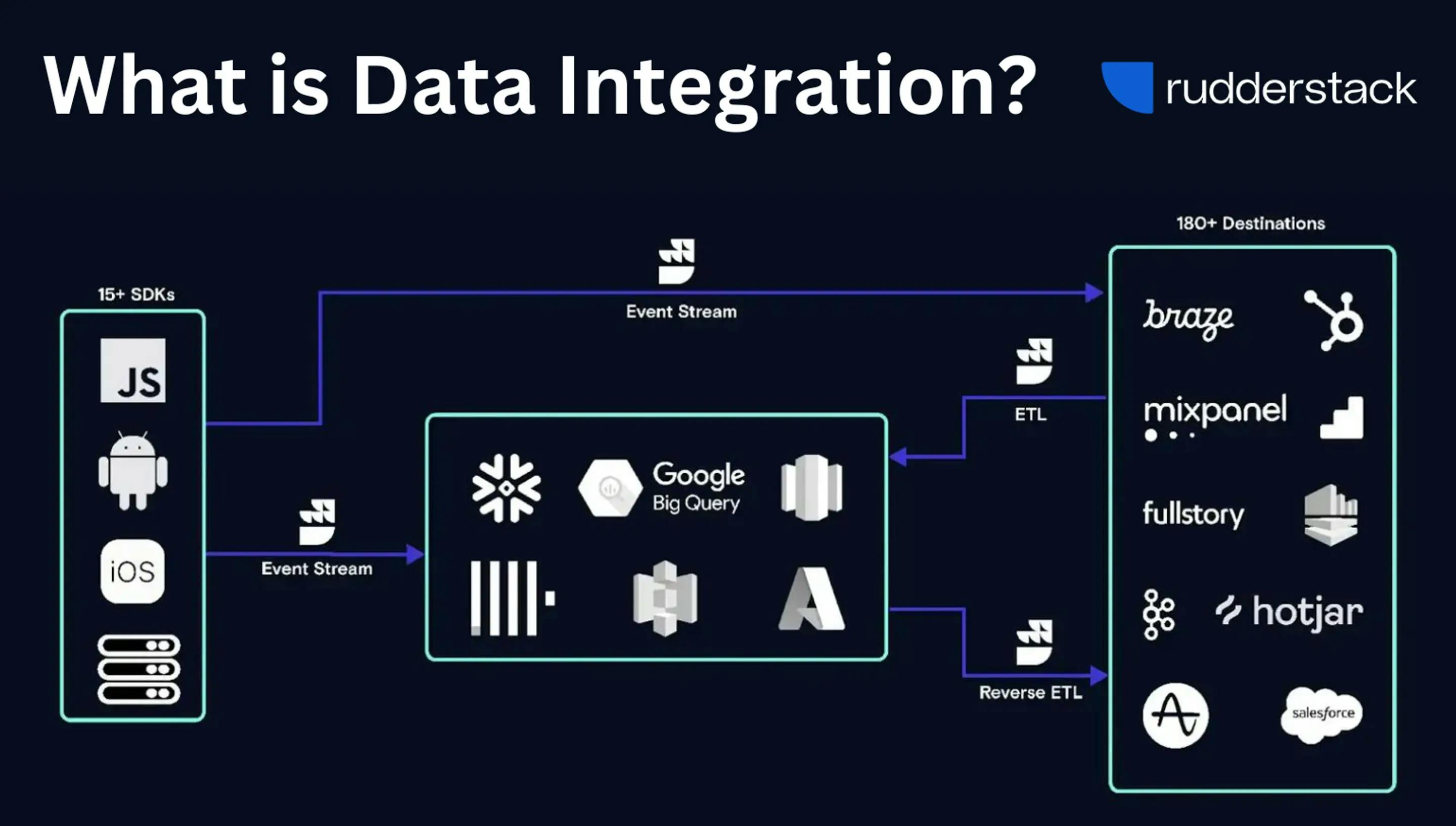

The role of the CDP in Data Integration

RudderStack, an open-source Customer Data Platform (CDP), provides data pipelines that collect data from various applications, websites, and SaaS platforms. It integrates this data into your warehouse and business tools, capturing event data in real time and loading it into your warehouse or data lake. RudderStack's approach is warehouse-first, developer-focused, and production-ready, with integrations for over 180 cloud and warehouse destinations. These capabilities support data collection, unification, and activation, making it an essential tool for effective data integration strategies.
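A conceptual Python sketch of the fan-out pattern described above follows, in which one captured event is delivered to a warehouse and to business tools. It is not RudderStack's API, SDK, or internal design. `EventRouter`, `WarehouseTable`, `EmailTool`, and the event shape are all hypothetical stand-ins, chosen only to show that each destination receives the same event and that a failure in one destination is recorded without blocking the others.

```python
from typing import Any, Callable, Dict, List, Tuple

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventRouter:
    """Fans each captured event out to every registered destination."""

    def __init__(self) -> None:
        self.destinations: Dict[str, Handler] = {}
        self.failures: List[Tuple[str, str]] = []

    def add_destination(self, name: str, handler: Handler) -> None:
        self.destinations[name] = handler

    def route(self, event: Event) -> None:
        for name, handler in self.destinations.items():
            try:
                handler(event)
            except Exception as exc:  # one failing destination must not block the rest
                self.failures.append((name, str(exc)))


class WarehouseTable:
    """Stand-in for a warehouse table that stores every raw event."""

    def __init__(self) -> None:
        self.rows: List[Event] = []

    def load(self, event: Event) -> None:
        self.rows.append(dict(event))


class EmailTool:
    """Stand-in for a business tool that only accepts identified users."""

    def __init__(self) -> None:
        self.contacts: Dict[str, str] = {}

    def sync(self, event: Event) -> None:
        email = event.get("traits", {}).get("email")
        if email is None:
            raise ValueError("event has no email trait")
        self.contacts[email] = event["event"]  # remember the latest event name


if __name__ == "__main__":
    warehouse, email_tool = WarehouseTable(), EmailTool()
    router = EventRouter()
    router.add_destination("warehouse", warehouse.load)
    router.add_destination("email_tool", email_tool.sync)
    router.route({"event": "Signed Up", "source": "web", "traits": {"email": "ada@example.com"}})
    router.route({"event": "Page Viewed", "source": "ios", "traits": {}})
    print(len(warehouse.rows), email_tool.contacts, router.failures)
```

In this sketch, the warehouse keeps every raw event while each tool accepts only what it can use. This mirrors, in simplified form, the warehouse-first idea of storing complete data centrally and activating subsets downstream.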

What Data Integration looks like in a CDP

To address data integration challenges and create detailed customer profiles that empower all teams in your organization, a Customer Data Platform (CDP) is crucial. RudderStack, a leading Segment alternative, assists you in collecting extensive customer data, irrespective of your data maturity level or the absence of a data warehouse. It enables impactful use of this data across your organization.